Example 6.18 [Student $t$-distribution].

Consider the simultaneous measurement ${\mathsf M}_{L^\infty ({\mathbb R} \times {\mathbb R}_+)}$ $({\mathsf O}_G^n = ({\mathbb R}^n, {\mathcal B}_{\mathbb R}^n, {{{G}}^n}) ,$ $S_{[(\mu, \sigma)]})$ in $L^\infty ({\mathbb R} \times {\mathbb R}_+)$. Thus, we take $\Omega = {\mathbb R} \times {\mathbb R}_+$ and $X={\mathbb R}^n$. Put $\Theta={\mathbb R}$ with the semi-distance $d_\Theta^x$ $(\forall x \in X)$ such that

\begin{align} d_\Theta^x (\theta_1, \theta_2) = \frac{|\theta_1-\theta_2|}{{\overline{\sigma}'(x)}/\sqrt{n}} \qquad (\forall \theta_1, \theta_2 \in \Theta={\mathbb R}) \end{align}

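where ${\overline{\sigma}'(x)}=\sqrt{\frac{n}{n-1}}\overline{\sigma}(x)$. The quantity $\pi:\Omega(={\mathbb R} \times {\mathbb R}_+) \to \Theta(={\mathbb R})$ is defined by

\begin{align} \pi(\omega) = \pi(\mu, \sigma) = \mu \qquad (\forall \omega=(\mu, \sigma) \in \Omega) \end{align}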

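Also, define the estimator $E:X(={\mathbb R}^n) \to \Theta(={\mathbb R})$ such that

\begin{align} E(x) = \overline{\mu}(x) = \frac{x_1 + x_2 + \cdots + x_n}{n} \qquad (\forall x=(x_1, x_2, \ldots, x_n) \in X) \end{align}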

Define the null hypothesis $H_N$ $(\subseteq \Theta= {\mathbb R})$ such that

\begin{align} H_N = \{ \mu_0 \} \end{align}

Thus, for any $ \omega=(\mu_0, \sigma ) (\in \Omega= {\mathbb R} \times {\mathbb R}_+ )$, we see that

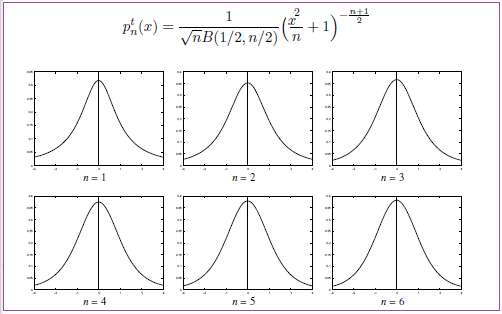

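where $p^t_{n-1}$ is the density of the $t$-distribution with $n-1$ degrees of freedom. Solving the equation $1-\alpha = \int_{-\eta^\alpha_{\omega}}^{\eta^\alpha_{\omega}} p^t_{n-1}(x) dx$, we get

\begin{align} \delta^{1-\alpha}_{\omega} = \eta^\alpha_{\omega} = t(\alpha/2) \end{align}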

That is, writing $\eta = t(\alpha/2)$, we have

\begin{align} \alpha = 1- \int_{-\eta}^{\eta} p^t_{n-1}(x) dx \tag{6.80} \end{align}
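The equation $1-\alpha = \int_{-\eta}^{\eta} p^t_{n-1}(x) dx$ has no closed-form solution for general $n$, so in practice $t(\alpha/2)$ is read from a table or computed numerically. The following is a minimal sketch of one way to do this, using only the Python standard library. The function names `t_pdf`, `central_mass` and `t_critical`, the use of Simpson's rule, and the bisection search are all illustrative choices and are not taken from the text.

```python
import math

def t_pdf(x: float, df: int) -> float:
    """Density p^t_df(x) of Student's t-distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

def central_mass(eta: float, df: int, steps: int = 2000) -> float:
    """Integral of p^t_df over [-eta, eta] by composite Simpson's rule (steps even)."""
    h = 2 * eta / steps
    total = t_pdf(-eta, df) + t_pdf(eta, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(-eta + i * h, df)
    return total * h / 3

def t_critical(alpha: float, n: int) -> float:
    """Solve 1 - alpha = central_mass(eta, n - 1) for eta by bisection."""
    df = n - 1
    lo, hi = 0.0, 1.0
    while central_mass(hi, df) < 1 - alpha:  # widen until the root is bracketed
        hi *= 2
    for _ in range(60):
        mid = (lo + hi) / 2
        if central_mass(mid, df) < 1 - alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

# Assumed example: n = 10 observations and alpha = 0.05.
eta = t_critical(alpha=0.05, n=10)
print(round(eta, 3))
```

For $n=10$ and $\alpha=0.05$ the result agrees with the tabulated value $t(0.025) \approx 2.262$ for 9 degrees of freedom.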

6.6.2: Confidence interval

Our present problem is as follows

Then, find the confidence interval ${D}_{x}^{1- \alpha; \Theta}( \subseteq \Theta)$ (which does not depend on $\sigma$) such that

| $\bullet$ | the probability that $\mu \in {D}_{x}^{1- \alpha; \Theta}$ is at least $1-\alpha$ |

Here, the smaller the confidence interval ${D}_{x}^{1- \alpha; \Theta}$, the more desirable it is.

Therefore, for any $x$ $(\in X)$, we get $D_x^{{1 - \alpha, \Theta }}$ (the $({1 - \alpha })$-confidence interval of $x$) as follows:

\begin{align} D_x^{{1 - \alpha }, \Theta} & = \{ \pi({\omega}) (\in \Theta) : \omega \in \Omega, \;\; d^x_\Theta (E(x), \pi(\omega ) ) \le \delta^{1 - \alpha }_{\omega } \} \nonumber \\ & = \{ \mu \in \Theta(={\mathbb R}) \;:\; \overline{\mu}(x) - \frac{{\overline{\sigma}'(x)}}{\sqrt{n}} t(\alpha/2) \le \mu \le \overline{\mu}(x) + \frac{{\overline{\sigma}'(x)}}{\sqrt{n}} t(\alpha/2) \} \tag{6.81} \end{align}

\begin{align} D_x^{{1 - \alpha }, \Omega} & = \{ {\omega} =(\mu, \sigma ) (\in \Omega) \;:\; d^x_\Theta (E(x), \pi(\omega ) ) \le \delta^{1 - \alpha }_{\omega } \} \nonumber \\ & = \{ {\omega} =(\mu, \sigma ) (\in \Omega) \;:\; \overline{\mu}(x) - \frac{{\overline{\sigma}'(x)}}{\sqrt{n}} t(\alpha/2) \le \mu \le \overline{\mu}(x) + \frac{{\overline{\sigma}'(x)}}{\sqrt{n}} t(\alpha/2) \} \tag{6.82} \end{align}

6.6.3: Statistical hypothesis testing [null hypothesis $H_N=\{\mu_0\} ( \subseteq \Theta = {\mathbb R})$]

Our present problem was as follows

Consider the simultaneous normal measurement ${\mathsf M}_{L^\infty ({\mathbb R} \times {\mathbb R}_+)}$ $({\mathsf O}_G^n = ({\mathbb R}^n, {\mathcal B}_{\mathbb R}^n, {{{G}}^n}) ,$ $S_{[(\mu, \sigma)]})$. Assume that

\begin{align} \mu = \mu_0 \end{align}

That is, assume the null hypothesis $H_N$ such that

\begin{align} H_N=\{ \mu_0 \} \quad (\subseteq \Theta= {\mathbb R} ) \end{align}

Let $0 < \alpha \ll 1$.

Then, find the rejection region ${\widehat R}_{{H_N}}^{\alpha; \Theta}( \subseteq \Theta)$ (which does not depend on $\sigma$) such that

| $\bullet$ | the probability that a measured value $x (\in{\mathbb R}^n )$ obtained by ${\mathsf M}_{L^\infty ({\mathbb R} \times {\mathbb R}_+)}$ $({\mathsf O}_G^n = ({\mathbb R}^n, {\mathcal B}_{\mathbb R}^n, {{{G}}^n}) ,$ $S_{[(\mu_0, \sigma)]})$ satisfies $E(x) \in {\widehat R}_{{H_N}}^{\alpha; \Theta}$ is at most $\alpha$. |

The rejection region ${\widehat R}_{H_N}^{\alpha, \Theta}$ (the $(\alpha)$-rejection region of the null hypothesis $H_N(=\{\mu_0\})$) is calculated as follows:

\begin{align} {\widehat R}_{H_N}^{\alpha, \Theta} & = \bigcap_{\omega =(\mu, \sigma ) \in \Omega (={\mathbb R} \times {\mathbb R}_+) \mbox{ such that } \pi(\omega)= \mu \in {H_N}(=\{\mu_0\})} \{ E(x) (\in \Theta) : \;\; d^x_\Theta (E(x), \pi(\omega ) ) \ge \eta^\alpha_{\omega } \} \nonumber \\ & = \{\overline{\mu}(x) \in \Theta(={\mathbb R}) \;:\; \frac{ |\overline{\mu}(x)- \mu_0 |}{ {{\overline{\sigma}'(x)}/\sqrt{n}} } \ge t(\alpha/2) \} \nonumber \\ & = \{\overline{\mu}(x) \in \Theta(={\mathbb R}) \;:\; \mu_0 \le \overline{\mu}(x) - \frac{{\overline{\sigma}'(x)}}{\sqrt{n}} t(\alpha/2) \mbox{ or } \overline{\mu}(x) + \frac{{\overline{\sigma}'(x)}}{\sqrt{n}} t(\alpha/2) \le \mu_0 \} \tag{6.83} \end{align}

Also,

\begin{align} {\widehat R}_{H_N}^{\alpha,X} & = \bigcap_{\omega =(\mu, \sigma ) \in \Omega (={\mathbb R} \times {\mathbb R}_+) \mbox{ such that } \pi(\omega)= \mu \in {H_N}(=\{\mu_0\})} \{ x \in X : \;\; d^x_\Theta (E(x), \pi(\omega ) ) \ge \eta^\alpha_{\omega } \} \nonumber \\ & = \{ x \in X ={\mathbb R}^n \;:\; \frac{ |\overline{\mu}(x)- \mu_0 |}{ {{\overline{\sigma}'(x)}/\sqrt{n}} } \ge t(\alpha/2) \} \nonumber \\ & = \{ x \in X ={\mathbb R}^n \;:\; \mu_0 \le \overline{\mu}(x) - \frac{{\overline{\sigma}'(x)}}{\sqrt{n}} t(\alpha/2) \mbox{ or } \overline{\mu}(x) + \frac{{\overline{\sigma}'(x)}}{\sqrt{n}} t(\alpha/2) \le \mu_0 \} \tag{6.84} \end{align}
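The rejection region (6.84) and the confidence interval (6.81) use the same bounds $\overline{\mu}(x) \pm \frac{\overline{\sigma}'(x)}{\sqrt{n}} t(\alpha/2)$: the measured value $x$ is rejected exactly when $\mu_0$ lies at or outside the ends of that interval. The sketch below illustrates this in Python. The function name `two_sided_t_test`, the sample data, and the choice to pass the critical value in as `t_crit` are assumptions made for illustration; 2.262 is the tabulated $t(0.025)$ for 9 degrees of freedom.

```python
import math
import statistics

def two_sided_t_test(x, mu0, t_crit):
    """Return (reject, interval) following (6.84) and (6.81).

    t_crit plays the role of t(alpha/2) for n - 1 degrees of freedom and is
    supplied by the caller, for example from a t-table.
    """
    n = len(x)
    mean = statistics.mean(x)            # \overline{\mu}(x)
    sigma_prime = statistics.stdev(x)    # \overline{\sigma}'(x), divisor n - 1
    half_width = sigma_prime / math.sqrt(n) * t_crit
    interval = (mean - half_width, mean + half_width)
    # x is in the rejection region when mu0 is not strictly inside the interval.
    reject = mu0 <= interval[0] or interval[1] <= mu0
    return reject, interval

# Hypothetical data with n = 10, so t(0.025) is about 2.262.
x = [4.9, 5.1, 5.3, 4.8, 5.2, 5.0, 5.4, 4.7, 5.1, 5.0]
reject_5, ci = two_sided_t_test(x, mu0=5.0, t_crit=2.262)
reject_6, _ = two_sided_t_test(x, mu0=6.0, t_crit=2.262)
print(reject_5, reject_6, ci)
```

With these data the interval is roughly $(4.895, 5.205)$, so $H_N=\{5.0\}$ is not rejected while $H_N=\{6.0\}$ is.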

Our present problem was as follows

Consider the simultaneous normal measurement ${\mathsf M}_{L^\infty ({\mathbb R} \times {\mathbb R}_+)}$ $({\mathsf O}_G^n = ({\mathbb R}^n, {\mathcal B}_{\mathbb R}^n, {{{G}}^n}) ,$ $S_{[(\mu, \sigma)]})$. Assume that

\begin{align} \mu \in (-\infty , \mu_0] \end{align}

That is, assume the null hypothesis $H_N$ such that

\begin{align} H_N=(-\infty , \mu_0] \quad (\subseteq \Theta= {\mathbb R} ) \end{align}

Let $0 < \alpha \ll 1$.

Then, find the rejection region ${\widehat R}_{{H_N}}^{\alpha; \Theta}( \subseteq \Theta)$ (which does not depend on $\sigma$) such that

| $\bullet$ | the probability that a measured value $x (\in{\mathbb R}^n )$ obtained by ${\mathsf M}_{L^\infty ({\mathbb R} \times {\mathbb R}_+)}$ $({\mathsf O}_G^n = ({\mathbb R}^n, {\mathcal B}_{\mathbb R}^n, {{{G}}^n}) ,$ $S_{[(\mu_0, \sigma)]})$ satisfies $E(x) \in {\widehat R}_{{H_N}}^{\alpha; \Theta}$ is at most $\alpha$. |

Here, the larger the rejection region ${\widehat R}_{{H_N}}^{\alpha; \Theta}$, the more desirable it is.

Since the null hypothesis $H_N$ is assumed to be

\begin{align} H_N = (- \infty , \mu_0], \end{align}

it suffices to define the semi-distance $d_\Theta^{x}$ in $\Theta(= {\mathbb R})$ such that

\begin{align} d_\Theta^x (\theta_1, \theta_2) = \left\{\begin{array}{ll} \frac{|\theta_1-\theta_2|}{{\overline{\sigma}'(x)}/\sqrt{n}} \quad & ( \forall \theta_1, \theta_2 \in \Theta={\mathbb R} \mbox{ such that } \mu_0 \le \theta_1, \theta_2 ) \\ \frac{\max \{ \theta_1, \theta_2 \}-\mu_0}{{\overline{\sigma}'(x)}/\sqrt{n}} \quad & ( \forall \theta_1, \theta_2 \in \Theta={\mathbb R} \mbox{ such that } \min \{ \theta_1, \theta_2 \} \le \mu_0 \le \max \{ \theta_1, \theta_2 \} ) \\ 0 & ( \forall \theta_1, \theta_2 \in \Theta={\mathbb R} \mbox{ such that } \theta_1, \theta_2 \le \mu_0 ) \end{array}\right. \tag{6.85} \end{align}

for any $x \in X={\mathbb R}^n$.

Then, the $(\alpha)$-rejection region ${\widehat R}_{H_N}^{\alpha, \Theta}$ is calculated as follows.

\begin{align} {\widehat R}_{H_N}^{\alpha, \Theta} & = \bigcap_{\omega =(\mu, \sigma ) \in \Omega (={\mathbb R} \times {\mathbb R}_+) \mbox{ such that } \pi(\omega)= \mu \in {H_N}(=(- \infty , \mu_0])} \{ E(x) (\in \Theta) : \;\; d^x_\Theta (E(x), \pi(\omega ) ) \ge \eta^\alpha_{\omega } \} \nonumber \\ & = \{\overline{\mu}(x) \in \Theta(={\mathbb R}) \;:\; \mu_0 \le \overline{\mu}(x) - \frac{{\overline{\sigma}'(x)}}{\sqrt{n}} t(\alpha) \} \tag{6.86} \end{align}

Also,

\begin{align} {\widehat R}_{H_N}^{\alpha, X} & = \bigcap_{\omega =(\mu, \sigma ) \in \Omega (={\mathbb R} \times {\mathbb R}_+) \mbox{ such that } \pi(\omega)= \mu \in {H_N}(=(- \infty , \mu_0])} \{ x (\in X ={\mathbb R}^n) \; :\; d^x_\Theta (E(x), \pi(\omega ) ) \ge \eta^\alpha_{\omega } \} \nonumber \\ & = \{ x (\in X ={\mathbb R}^n) \; :\; \mu_0 \le \overline{\mu}(x) - \frac{{\overline{\sigma}'(x)}}{\sqrt{n}} t(\alpha) \} \tag{6.87} \end{align}

Remark 6.25 There are many approaches to statistical hypothesis testing. The most natural one is the likelihood ratio, which is discussed in

| [1]: | S. Ishikawa, "Mathematical Foundations of Measurement Theory," Keio University Press Inc., 2006. |

| [2]: | S. Ishikawa, "A Measurement Theoretical Foundation of Statistics," Applied Mathematics, Vol. 3, No. 3, 2012, pp. 283-292. |

Also, we think that the arguments concerning "null hypothesis vs. alternative hypothesis" and "one-sided test vs. two-sided test" are practical rather than theoretical.
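To make the one-sided region (6.87) concrete, the following Python sketch checks whether a sample falls in it. The function name `one_sided_reject` and the data are hypothetical, and the critical values are passed in by the caller: 1.833 and 2.262 are the tabulated $t(0.05)$ and $t(0.025)$ for 9 degrees of freedom.

```python
import math
import statistics

def one_sided_reject(x, mu0, t_crit):
    """True if x lies in the rejection region (6.87) for H_N = (-inf, mu0].

    t_crit plays the role of t(alpha) for n - 1 degrees of freedom.
    """
    n = len(x)
    # Lower bound: sample mean minus (sigma' / sqrt(n)) * t(alpha).
    lower = statistics.mean(x) - statistics.stdev(x) / math.sqrt(n) * t_crit
    return mu0 <= lower

# Hypothetical data with n = 10.
x = [4.9, 5.1, 5.3, 4.8, 5.2, 5.0, 5.4, 4.7, 5.1, 5.0]
rejected_49 = one_sided_reject(x, mu0=4.9, t_crit=1.833)         # uses t(alpha)
rejected_50 = one_sided_reject(x, mu0=5.0, t_crit=1.833)
rejected_49_two_sided = one_sided_reject(x, mu0=4.9, t_crit=2.262)  # uses t(alpha/2)
print(rejected_49, rejected_50, rejected_49_two_sided)
```

For these data the lower bound with $t(\alpha)$ is about 4.924, so $\mu_0 = 4.9$ is rejected and $\mu_0 = 5.0$ is not. Replacing $t(\alpha)$ by the two-sided value $t(\alpha/2)$ moves the bound down to about 4.895, and $\mu_0 = 4.9$ is then no longer rejected.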