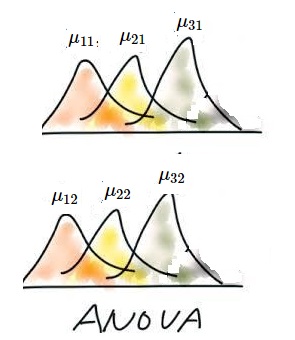

For example, consider the following.

| Cell | Observations |
|---|---|
| $(\sharp_{11})$ | Data $x_{111}, x_{112}, x_{113},\ldots, x_{11n}$ are obtained from the normal distribution $N(\mu_{11}, \sigma)$ |
| $(\sharp_{21})$ | Data $x_{211}, x_{212}, x_{213},\ldots, x_{21n}$ are obtained from the normal distribution $N(\mu_{21}, \sigma)$ |
| $(\sharp_{31})$ | Data $x_{311}, x_{312}, x_{313},\ldots, x_{31n}$ are obtained from the normal distribution $N(\mu_{31}, \sigma)$ |
| $(\sharp_{12})$ | Data $x_{121}, x_{122}, x_{123},\ldots, x_{12n}$ are obtained from the normal distribution $N(\mu_{12}, \sigma)$ |
| $(\sharp_{22})$ | Data $x_{221}, x_{222}, x_{223},\ldots, x_{22n}$ are obtained from the normal distribution $N(\mu_{22}, \sigma)$ |
| $(\sharp_{32})$ | Data $x_{321}, x_{322}, x_{323},\ldots, x_{32n}$ are obtained from the normal distribution $N(\mu_{32}, \sigma)$ |
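To make the setting concrete, the following minimal Python sketch draws data of this form for $a=3$ row levels and $b=2$ column levels (the six cells listed above). The cell means in `mu`, the common standard deviation `sigma` and the sample size `n` are made-up illustration values, and `simulate_two_way` is a hypothetical helper. Indices are 0-based, so `x[0][1]` holds the sample of cell $(\sharp_{12})$.

```python
import random

def simulate_two_way(mu, sigma, n, seed=None):
    """Draw x[i][j][k] ~ N(mu[i][j], sigma) for k = 0, ..., n-1 in every cell (i, j).

    mu is an a-by-b nested list of cell means and sigma is the common
    standard deviation shared by all cells (indices are 0-based here).
    """
    rng = random.Random(seed)
    return [[[rng.gauss(m, sigma) for _ in range(n)] for m in row] for row in mu]

# Hypothetical cell means mu_{ij} for a = 3 row levels and b = 2 column levels.
mu = [[10.0, 12.0],
      [11.0, 13.0],
      [9.0, 11.0]]
x = simulate_two_way(mu, sigma=1.5, n=4, seed=0)
print(len(x), len(x[0]), len(x[0][0]))  # 3 2 4
```

Passing a fixed `seed` makes the draw reproducible, which is convenient when the same data set is reused to illustrate the estimator and the test below.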

How should we answer it?

$\S$7.3.3 Null hypothesis: $\quad \mu_{\bullet 1}=\mu_{\bullet 2}=\cdots =\mu_{\bullet b}=\mu_{\bullet \bullet }$

Our present problem is as follows:

Then, find the largest ${\widehat R}_{{H_N}}^{\alpha; \Theta}( \subseteq \Theta)$ (independent of $\sigma$) such that

| $(C_1)':$ | the probability that a measured value $x(\in{\mathbb R}^{abn} )$ obtained by $ {\mathsf M}_{L^\infty ({\mathbb R}^{ab} \times {\mathbb R}_+ )} ( {\mathsf O}_G^{{{abn}}} = (X(\equiv {\mathbb R}^{{{abn}}}), {\mathcal B}_{\mathbb R}^{{{abn}}}, {{{G}}^{{{abn}}}} ), S_{[(\mu=(\mu_{ij}\;|\; i=1,2,\cdots, a, j=1,2,\cdots,b ), \sigma )]} ) $ satisfies \begin{align} E(x) \in {\widehat R}_{{H_N}}^{\alpha; \Theta} \end{align} is less than $\alpha$. |

Since $a$ and $b$ play symmetric roles, Problem 7.4 can easily be solved in the same way as in $\S$7.3.2.
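The null hypothesis of $\S$7.3.3 constrains only the column marginal means. Assuming the usual definitions $\mu_{\bullet j}=\frac{1}{a}\sum_{i=1}^a \mu_{ij}$ and $\mu_{\bullet\bullet}=\frac{1}{b}\sum_{j=1}^b\mu_{\bullet j}$ (matching the averages $x_{\bullet j\bullet}$ and $x_{\bullet\bullet\bullet}$ used by the estimator), a minimal Python check of whether a given table of cell means satisfies it might look like this. The names `column_means`, `column_null_holds` and the tolerance `tol` are hypothetical, introduced only for illustration.

```python
def column_means(mu):
    """Return ([mu_{.1}, ..., mu_{.b}], mu_{..}) for an a-by-b list of cell means."""
    a, b = len(mu), len(mu[0])
    col = [sum(mu[i][j] for i in range(a)) / a for j in range(b)]
    return col, sum(col) / b

def column_null_holds(mu, tol=1e-12):
    """True if mu_{.1} = ... = mu_{.b} = mu_{..} holds up to the tolerance tol."""
    col, grand = column_means(mu)
    return all(abs(c - grand) <= tol for c in col)

print(column_null_holds([[1.0, 3.0], [3.0, 1.0]]))  # True: both column means equal 2
print(column_null_holds([[1.0, 2.0], [1.0, 2.0]]))  # False: column means are 1 and 2
```

The first example shows that the hypothesis can hold even when individual cells differ, as long as their column averages agree.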

$\S$7.3.4 Null hypothesis:$\quad (\alpha \beta)_{ij}=0$ ($\forall i=1,2, \ldots, a,\;j=1,2, \ldots, b$ )

Now, put \begin{align} \Theta = {\mathbb R}^{ab} \tag{7.50} \end{align}and define the system quantity $\pi : \Omega \to \Theta$ by

\begin{align} \Omega = {\mathbb R}^{ab} \times {\mathbb R}_+ \ni \omega =( (\mu_{ij})_{i=1,2, \ldots, a,\;\;j=1,2, \ldots, b } , \sigma) \mapsto \pi(\omega) = ((\alpha \beta)_{ij})_{i=1,2, \ldots, a,\;\;j=1,2, \ldots, b } \in \Theta = {\mathbb R}^{ab} \tag{7.51} \end{align}Here, recall that

\begin{align} (\alpha \beta)_{ij} =\mu_{ij}-\mu_{i \bullet} -\mu_{\bullet j }+\mu_{\bullet \bullet } \tag{7.52} \end{align}Also, the estimator $E: X(={\mathbb R}^{abn}) \to \Theta(={\mathbb R}^{ab} )$ is defined by

\begin{align} & E( (x_{ijk})_{i=1,\ldots,a, \; j=1,\ldots,b, \; k=1,\ldots,n }) \nonumber \\ = & \Big(\frac{ \sum_{k=1}^n x_{ijk}}{n} - \frac{ \sum_{j=1}^b \sum_{k=1}^n x_{ijk}}{bn} - \frac{ \sum_{i=1}^a\sum_{k=1}^n x_{ijk}}{an} + \frac{\sum_{i=1}^a \sum_{j=1}^b \sum_{k=1}^n x_{ijk}}{abn} \Big)_{i=1,\ldots, a, \; j=1,\ldots,b } \nonumber \\ = & \Big( x_{i j \bullet } - x_{i \bullet \bullet } - x_{\bullet j \bullet } + x_{\bullet \bullet \bullet } \Big)_{i=1,\ldots, a, \; j=1,\ldots,b} \tag{7.53} \end{align}Our present problem is as follows:

Problem 7.5 [The two-way ANOVA].

Consider the parallel simultaneous normal measurement:

\begin{align} {\mathsf M}_{L^\infty ({\mathbb R}^{ab} \times {\mathbb R}_+ )} ( {\mathsf O}_G^{{{abn}}} = (X(\equiv {\mathbb R}^{{{abn}}}), {\mathcal B}_{\mathbb R}^{{{abn}}}, {{{G}}^{{{abn}}}} ), S_{[(\mu=(\mu_{ij}\;|\; i=1,2,\cdots, a, j=1,2,\cdots,b ), \sigma )]} ) \end{align}The null hypothesis $H_N( \subseteq \Theta ={\mathbb R}^{ab})$ is defined by

\begin{align} H_N & = \{ ((\alpha \beta)_{ij})_{i=1,2, \ldots, a,\;\;j=1,2, \ldots, b } \in \Theta = {\mathbb R}^{ab} \;:\; (\alpha \beta)_{ij}=0, (\forall {i=1,2, \ldots, a,\;\;j=1,2, \ldots, b } ) \} \tag{7.54} \end{align}That is,

\begin{align} (\alpha \beta)_{ij} =\mu_{ij}-\mu_{i \bullet} -\mu_{\bullet j }+\mu_{\bullet \bullet }=0 \quad (i=1,2, \cdots, a, \quad j=1,2, \cdots,b ) \tag{7.55} \end{align}Let $0 < \alpha \ll 1$.

Then, find the largest ${\widehat R}_{{H_N}}^{\alpha; \Theta}( \subseteq \Theta)$ (independent of $\sigma$) such that

| $(D_1):$ | the probability that a measured value $x(\in{\mathbb R}^{abn} )$ obtained by $ {\mathsf M}_{L^\infty ({\mathbb R}^{ab} \times {\mathbb R}_+ )} ( {\mathsf O}_G^{{{abn}}} = (X(\equiv {\mathbb R}^{{{abn}}}), {\mathcal B}_{\mathbb R}^{{{abn}}}, {{{G}}^{{{abn}}}} ), S_{[(\mu=(\mu_{ij}\;|\; i=1,2,\cdots, a, j=1,2,\cdots,b ), \sigma )]} ) $ satisfies \begin{align} E(x) \in {\widehat R}_{{H_N}}^{\alpha; \Theta} \end{align} is less than $\alpha$. |
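Both the estimator $E$ of (7.53) and the interaction $(\alpha\beta)_{ij}$ of (7.52) follow the pattern "cell mean minus row mean minus column mean plus grand mean". A minimal pure-Python sketch, assuming balanced data stored as nested lists `x[i][j][k]` with 0-based indices (the helper names and the tolerance `tol` are hypothetical), is:

```python
def interaction_estimate(x):
    """E(x) of (7.53) for balanced data x[i][j][k] (0-based indices).

    Returns the a-by-b table x_{ij.} - x_{i..} - x_{.j.} + x_{...}.
    """
    a, b, n = len(x), len(x[0]), len(x[0][0])
    cell = [[sum(x[i][j]) / n for j in range(b)] for i in range(a)]  # x_{ij.}
    row = [sum(cell[i]) / b for i in range(a)]                        # x_{i..}
    col = [sum(cell[i][j] for i in range(a)) / a for j in range(b)]   # x_{.j.}
    grand = sum(row) / a                                              # x_{...}
    return [[cell[i][j] - row[i] - col[j] + grand for j in range(b)]
            for i in range(a)]

def interaction_effects(mu):
    """(alpha beta)_{ij} of (7.52): the same formula applied to the cell means."""
    return interaction_estimate([[[m] for m in row] for row in mu])

def in_null_hypothesis(mu, tol=1e-12):
    """True if (alpha beta)_{ij} = 0 for all i, j, i.e. pi(omega) lies in H_N."""
    return all(abs(v) <= tol for row in interaction_effects(mu) for v in row)

print(in_null_hypothesis([[10.0, 12.0], [11.0, 13.0], [9.0, 11.0]]))  # True (additive means)
print(interaction_effects([[1.0, 0.0], [0.0, 1.0]]))  # [[0.5, -0.5], [-0.5, 0.5]]
```

Because every cell holds the same number $n$ of observations, averaging the cell means over $j$ (or over $i$) reproduces $x_{i\bullet\bullet}$ (or $x_{\bullet j\bullet}$). Since $E$ is linear and vanishes on additive cell means, `interaction_estimate` returns the same table for $x$ and for $x-\mu$ whenever `in_null_hypothesis(mu)` holds, which is the content of (7.59).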

Answer.

Now, put \begin{align} & \| \theta^{(1)}- \theta^{(2)} \|_\Theta = \sqrt{ \sum_{i=1}^a \sum_{j=1}^b \Big(\theta_{ij}^{(1)} - \theta_{ij}^{(2)} \Big)^2 } \tag{7.56} \\ & \qquad (\forall \theta^{(\ell)} =( \theta_{ij}^{(\ell)})_{i=1,2, \ldots, a,\;\;j=1,2, \ldots, b } \in {\mathbb R}^{ab}, \; \ell=1,2 ) \nonumber \end{align}and define the semi-distance $d_\Theta^x$ in $\Theta$ by

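\begin{align} & d_\Theta^x (\theta^{(1)}, \theta^{(2)}) = \frac{\|\theta^{(1)}- \theta^{(2)} \|_\Theta}{ \sqrt{{\overline{SS}}(x)} } \qquad (\forall \theta^{(1)}, \theta^{(2)} \in \Theta, \forall x \in X ) \tag{7.57} \end{align}where ${\overline{SS}}(x) = \frac{1}{abn}\sum_{i=1}^a \sum_{j=1}^b\sum_{k=1}^n (x_{ijk} - x_{ij \bullet})^2$ is the mean within-cell sum of squares. Then,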

\begin{align} & E( (x_{ijk}-\mu_{ij})_{i=1,\ldots,a, \; j=1,\ldots,b, \; k=1,\ldots,n }) \nonumber \\ = & \Big(\frac{ \sum_{k=1}^n (x_{ijk}-\mu_{ij})}{n} - \frac{ \sum_{j=1}^b \sum_{k=1}^n (x_{ijk}-\mu_{ij})}{bn} \nonumber \\ & \qquad - \frac{ \sum_{i=1}^a\sum_{k=1}^n (x_{ijk}-\mu_{ij})}{an} + \frac{\sum_{i=1}^a \sum_{j=1}^b \sum_{k=1}^n (x_{ijk}-\mu_{ij})}{abn} \Big)_{i=1,\ldots, a, \; j=1,\ldots,b } \nonumber \\ = & \Big( (x_{i j \bullet} - \mu_{ij}) - (x_{i \bullet \bullet }-\mu_{i \bullet}) - (x_{\bullet j \bullet }- \mu_{\bullet j}) + (x_{\bullet \bullet \bullet }- \mu_{\bullet \bullet }) \Big)_{i=1,\ldots, a, \; j=1,\ldots,b} \nonumber \\ = & \Big( x_{i j \bullet } - x_{i \bullet \bullet } - x_{\bullet j \bullet } + x_{\bullet \bullet \bullet } \Big)_{i=1,\ldots, a, \; j=1,\ldots,b} \qquad (\mbox{by the null hypothesis } (\alpha \beta)_{ij}=0 ) \tag{7.58} \end{align} Therefore, \begin{align} E( (x_{ijk})_{i=1,\ldots,a, \; j=1,\ldots,b, \; k=1,\ldots,n }) = E( (x_{ijk}-\mu_{ij})_{i=1,\ldots,a, \; j=1,\ldots,b, \; k=1,\ldots,n }) \tag{7.59} \end{align}Thus, for each $i=1,\ldots,a$ and $j=1,\ldots,b$,

\begin{align} & E_{ij} (x_{ijk} -\mu_{ij}) \nonumber \\ = & \frac{ \sum_{k=1}^n (x_{ijk}-\mu_{ij})}{n} - \frac{ \sum_{j=1}^b \sum_{k=1}^n (x_{ijk}-\mu_{ij})}{bn} - \frac{ \sum_{i=1}^a\sum_{k=1}^n (x_{ijk}-\mu_{ij})}{an} \nonumber \\ & \qquad \qquad + \frac{\sum_{i=1}^a \sum_{j=1}^b \sum_{k=1}^n(x_{ijk}-\mu_{ij})}{abn} \nonumber \\ = & E_{ij}(x)- (\alpha\beta)_{ij} \nonumber \\ = & x_{i j \bullet } - x_{i \bullet \bullet } - x_{\bullet j \bullet } + x_{\bullet \bullet \bullet } - (\alpha\beta)_{ij} \tag{7.60} \end{align} Moreover, \begin{align} \| E(x) - \pi (\omega )\|^2_\Theta = \Big\| \Big( E_{ij}(x) - (\alpha \beta)_{ij} \Big)_{i=1,\ldots, a, \; j=1,\ldots,b } \Big\|_\Theta^2 \tag{7.61} \end{align}Recalling the null hypothesis $H_N$ (i.e., $(\alpha \beta)_{ij}=0$ for all $i=1,2,\ldots, a$ and $j=1,2, \ldots, b$), we see that

\begin{align} \| E(x) - \pi (\omega )\|^2_\Theta = \sum_{i=1}^a\sum_{j=1}^b( x_{i j \bullet } - x_{i \bullet \bullet } - x_{\bullet j \bullet } + x_{\bullet \bullet \bullet } )^2 \tag{7.62} \end{align}Thus, for each $\omega=(\mu, \sigma) \; (\in \Omega= {\mathbb R}^{ab} \times {\mathbb R}_+ )$, define the positive real number $\eta^\alpha_{\omega}$ by

\begin{align} \eta^\alpha_{\omega} = \inf \{ \eta > 0: [{G}^{abn}(E^{-1} ( {{ Ball}^C_{d_\Theta^{x}}}(\pi(\omega) ; \eta)))](\omega ) \le \alpha \} \tag{7.63} \end{align}Recalling the null hypothesis $H_N$ (i.e., $(\alpha \beta)_{ij}=0$ for all $i=1,2,\ldots, a$ and $j=1,2, \ldots, b$), we calculate $\eta^\alpha_{\omega}$ as follows.

\begin{align} & E^{-1}({{ Ball}^C_{d_\Theta^{x} }}(\pi(\omega) ; \eta )) =\{ x \in X = {\mathbb R}^{abn} \;:\; d_\Theta^x (E(x), \pi(\omega )) > \eta \} \nonumber \\ = & \{ x \in X = {\mathbb R}^{abn} \;:\; \frac{ abn \sum_{i=1}^a\sum_{j=1}^b( x_{i j \bullet } - x_{i \bullet \bullet } - x_{\bullet j \bullet } + x_{\bullet \bullet \bullet } )^2 }{ \sum_{i=1}^a \sum_{j=1}^b\sum_{k=1}^n (x_{ijk} - x_{ij \bullet})^2 } > \eta^2 \} \tag{7.64} \end{align}Thus, for any $\omega =((\mu_{ij})_{i=1,2,\ldots,a, \;j=1,2,\ldots,b}, \sigma) \in \Omega ={\mathbb R}^{ab } \times {\mathbb R}_{+}$ such that $\pi( \omega )\in H_N (\subseteq {\mathbb R}^{ab} )$ (i.e., $(\alpha \beta)_{ij}=0$ for all $i=1,2,\ldots, a$ and $j=1,2, \ldots, b$), we see that

\begin{align} & [{G}^{abn} ( E^{-1}({{ Ball}^C_{d_\Theta^{x} }}(\pi(\omega) ; \eta )) )] (\omega) \nonumber \\ = & \frac{1} {({ {\sqrt{2 \pi } \sigma} })^{abn}} \underset{ E^{-1}({{ Ball}^C_{d_\Theta^{x} }}(\pi(\omega) ; \eta )) } {\int \cdots \int} \exp[- \frac{ \sum_{i=1}^a \sum_{j=1}^b \sum_{k=1}^n (x_{ijk} - \mu_{ij} )^2 }{2 \sigma^2} ] \times_{k=1}^n \times_{j=1}^b \times_{i=1}^a d{x_{ijk} } \nonumber \\ = & \frac{1} {({ {\sqrt{2 \pi } \sigma} })^{abn}} \underset{ \{x \in X \;:\; d_\Theta^x ( E(x), \pi(\omega )) > \eta \} } {\int \cdots \int} \exp[- \frac{ \sum_{i=1}^a \sum_{j=1}^b \sum_{k=1}^n (x_{ijk} - \mu_{ij} )^2 }{2 \sigma^2} ] \times_{k=1}^n \times_{j=1}^b \times_{i=1}^a d{x_{ijk} } \nonumber \\ = & \frac{1} {({ {\sqrt{2 \pi }} })^{abn}} \underset{ \frac{ \sum_{i=1}^a\sum_{j=1}^b( x_{i j \bullet } - x_{i \bullet \bullet } - x_{\bullet j \bullet } + x_{\bullet \bullet \bullet } )^2 }{ \sum_{i=1}^a \sum_{j=1}^b\sum_{k=1}^n (x_{ijk} - x_{ij \bullet})^2 } > \frac{\eta^2}{abn} } {\int \cdots \int} \exp[- \frac{ \sum_{i=1}^a \sum_{j=1}^b \sum_{k=1}^n (x_{ijk} )^2 }{2} ] \times_{k=1}^n \times_{j=1}^b \times_{i=1}^a d{x_{ijk} } \nonumber \\ = & \frac{1} {({ {\sqrt{2 \pi }} })^{abn}} \underset{ \frac{ \frac{ n\sum_{i=1}^a\sum_{j=1}^b( x_{i j \bullet } - x_{i \bullet \bullet } - x_{\bullet j \bullet } + x_{\bullet \bullet \bullet } )^2}{(a-1)(b-1)} }{ \frac{\sum_{i=1}^a \sum_{j=1}^b\sum_{k=1}^n (x_{ijk} - x_{ij \bullet})^2}{ ab(n-1) } } > \frac{\eta^2(n-1)}{(a-1)(b-1)} } {\int \cdots \int} \exp[- \frac{ \sum_{i=1}^a \sum_{j=1}^b \sum_{k=1}^n (x_{ijk} )^2 }{2} ] \times_{k=1}^n \times_{j=1}^b \times_{i=1}^a d{x_{ijk} } \tag{7.65} \end{align}

| $(D_2):$ | Then, by the formula of Gauss integrals (Formula 7.8 (D) in $\S$7.4), we see that \begin{align} [{G}^{abn} ( E^{-1}({{ Ball}^C_{d_\Theta^{x} }}(\pi(\omega) ; \eta )) )] (\omega) = \int_{\frac{\eta^2 (n-1)}{(a-1)(b-1)}}^{\infty} p_{((a-1)(b-1),ab(n-1)) }^F(t) \, dt \tag{7.66} \end{align} |

where $p_{((a-1)(b-1),ab(n-1)) }^F$ is the probability density function of the $F$-distribution with $((a-1)(b-1),ab(n-1))$ degrees of freedom.

Hence, it suffices to solve the following equation:

\begin{align} \frac{\eta^2 (n-1)}{(a-1)(b-1)} ={F_{ab(n-1), \alpha}^{(a-1)(b-1)} } \quad (=\mbox{"$\alpha$-point"}) \tag{7.67} \end{align} Thus, we see that \begin{align} (\eta^\alpha_{\omega})^2 = {F_{ab(n-1), \alpha}^{(a-1)(b-1)} } \, \frac{(a-1)(b-1)}{n-1} \tag{7.68} \end{align}Therefore, we get the $(\alpha)$-rejection region ${\widehat R}_{{H_N}}^{\alpha; \Theta}$ (or ${\widehat R}_{{H_N}}^{\alpha; X}$), where $H_N =\{((\alpha \beta)_{ij})_{i=1,2, \cdots, a, j=1,2, \cdots, b} \; :\; (\alpha \beta)_{ij}=0 \; (i=1,2, \cdots, a, j=1,2, \cdots, b)\}( \subseteq \Theta= {\mathbb R}^{ab})$:

\begin{align} {\widehat R}_{{H_N}}^{\alpha; \Theta} & = \bigcap_{\omega =((\mu_{ij})_{i=1,\ldots,a,\; j=1,\ldots,b}, \sigma ) \in \Omega (={\mathbb R}^{ab} \times {\mathbb R}_+ ) \mbox{ such that } \pi(\omega) \in {H_N}} \{ E({x}) (\in \Theta) : d_\Theta^{x} (E({x}), \pi(\omega)) \ge \eta^\alpha_{\omega } \} \nonumber \\ & = \{ E({x}) (\in \Theta) : \frac{ n(\sum_{i=1}^a \sum_{j=1}^b( x_{ij \bullet} - x_{i \bullet \bullet} - x_{\bullet j \bullet} + x_{\bullet \bullet \bullet} )^2)/((a-1)(b-1))}{ (\sum_{i=1}^a \sum_{j=1}^b\sum_{k=1}^n (x_{ijk} - x_{ij \bullet})^2) /(ab(n-1)) } \ge {F_{ab(n-1), \alpha}^{(a-1)(b-1)} } \} \tag{7.69} \end{align} Also, \begin{align} {\widehat R}_{{H_N}}^{\alpha; X}= E^{-1}({\widehat R}_{{H_N}}^{\alpha; \Theta}) = \{ x (\in X) : \frac{ n(\sum_{i=1}^a \sum_{j=1}^b( x_{ij \bullet} - x_{i \bullet \bullet} - x_{\bullet j \bullet} + x_{\bullet \bullet \bullet} )^2)/((a-1)(b-1))}{ (\sum_{i=1}^a \sum_{j=1}^b\sum_{k=1}^n (x_{ijk} - x_{ij \bullet})^2) /(ab(n-1)) } \ge {F_{ab(n-1), \alpha}^{(a-1)(b-1)} } \} \tag{7.70} \end{align}
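As a sketch of how the resulting test might be applied to balanced data: compute the standard two-way ANOVA interaction ratio $MS_{AB}/MS_E$ (its numerator carries the factor $n$ because each $x_{ij\bullet}$ averages $n$ observations) and reject $H_N$ when it reaches the $\alpha$-point $F_{ab(n-1),\alpha}^{(a-1)(b-1)}$. The critical value is simply passed in as a number; if SciPy is available, `scipy.stats.f.ppf(1 - alpha, dfn, dfd)` returns it. The helper names and the data below are hypothetical.

```python
def interaction_f_statistic(x):
    """Interaction F ratio MS_AB / MS_E for balanced data x[i][j][k] (0-based)."""
    a, b, n = len(x), len(x[0]), len(x[0][0])
    cell = [[sum(x[i][j]) / n for j in range(b)] for i in range(a)]  # x_{ij.}
    row = [sum(cell[i]) / b for i in range(a)]                        # x_{i..}
    col = [sum(cell[i][j] for i in range(a)) / a for j in range(b)]   # x_{.j.}
    grand = sum(row) / a                                              # x_{...}
    # SS_AB: n times the squared interaction estimates, (a-1)(b-1) degrees of freedom.
    ss_ab = n * sum((cell[i][j] - row[i] - col[j] + grand) ** 2
                    for i in range(a) for j in range(b))
    # SS_E: within-cell sum of squares, ab(n-1) degrees of freedom.
    ss_e = sum((v - cell[i][j]) ** 2
               for i in range(a) for j in range(b) for v in x[i][j])
    return (ss_ab / ((a - 1) * (b - 1))) / (ss_e / (a * b * (n - 1)))

def reject_no_interaction(x, f_crit):
    """True if x lies in the rejection region, i.e. the F ratio is >= f_crit."""
    return interaction_f_statistic(x) >= f_crit

# Made-up 2 x 2 example with n = 3 and the same within-cell spread in every cell.
spread = (-1.0, 0.0, 1.0)
strong = [[[m + d for d in spread] for m in row] for row in [[4.0, 0.0], [0.0, 4.0]]]
additive = [[[m + d for d in spread] for m in row] for row in [[1.0, 2.0], [3.0, 4.0]]]
f_crit = 5.32  # approximately the upper 5% point of F(1, 8)
print(interaction_f_statistic(strong), reject_no_interaction(strong, f_crit))      # 48.0 True
print(interaction_f_statistic(additive), reject_no_interaction(additive, f_crit))  # 0.0 False
```

With additive cell means every interaction estimate vanishes, so the ratio is $0$ and $H_N$ is not rejected; the crossed pattern in `strong` gives a large ratio and is rejected.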

| $\fbox{Note 7.4}$ | It should be noted that (D$_2$) is the only purely mathematical step. |
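Since (D$_2$) is the purely mathematical step, it can be sanity-checked numerically. The pure-Python sketch below (helper names hypothetical) simulates data under $H_N$ and compares the empirical mean of the interaction ratio $MS_{AB}/MS_E$ with $d_2/(d_2-2)$, the exact mean of an $F$-distribution with $d_2=ab(n-1)$ denominator degrees of freedom. Under $H_N$ the ratio depends only on $x-\mu$ and is unchanged by rescaling, so $\mu=0$ and $\sigma=1$ are used without loss of generality. A close match is consistent with (D$_2$) but is of course not a proof.

```python
import random

def interaction_f_statistic(x):
    """Interaction F ratio MS_AB / MS_E for balanced data x[i][j][k]."""
    a, b, n = len(x), len(x[0]), len(x[0][0])
    cell = [[sum(x[i][j]) / n for j in range(b)] for i in range(a)]
    row = [sum(cell[i]) / b for i in range(a)]
    col = [sum(cell[i][j] for i in range(a)) / a for j in range(b)]
    grand = sum(row) / a
    ss_ab = n * sum((cell[i][j] - row[i] - col[j] + grand) ** 2
                    for i in range(a) for j in range(b))
    ss_e = sum((v - cell[i][j]) ** 2
               for i in range(a) for j in range(b) for v in x[i][j])
    return (ss_ab / ((a - 1) * (b - 1))) / (ss_e / (a * b * (n - 1)))

def simulate_null_statistics(a, b, n, reps, seed=0):
    """F ratios for data drawn under H_N with mu = 0 and sigma = 1."""
    rng = random.Random(seed)
    out = []
    for _ in range(reps):
        x = [[[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(b)]
             for _ in range(a)]
        out.append(interaction_f_statistic(x))
    return out

a, b, n = 3, 2, 5
stats = simulate_null_statistics(a, b, n, reps=4000)
d2 = a * b * (n - 1)  # denominator degrees of freedom ab(n-1) = 24
# Empirical mean of the simulated ratios vs. the exact mean of F((a-1)(b-1), ab(n-1)).
print(sum(stats) / len(stats), d2 / (d2 - 2))
```

Increasing `reps` tightens the agreement; a histogram of `stats` could likewise be compared with the $F$ density $p^F_{((a-1)(b-1),ab(n-1))}$.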