6.4.3: Statistical hypothesis testing [null hypothesis $H_N=\{\sigma_0\} \subseteq \Theta = {\mathbb R}_+$]

Then, find the rejection region ${\widehat R}_{{H_N}}^{\alpha; \Theta}( \subseteq \Theta)$ (which may depend on $\mu$) such that

is less that $\alpha$.

Here, the more the rejection region ${\widehat R}_{{H_N}}^{\alpha; \Theta}$ is large, the more it is desirable.

Let $d_{\Theta}^{(1)}$ be as in (6.46).

For any $ \omega=(\mu, {\sigma} ) (\in \Omega= {\mathbb R} \times {\mathbb R}_+ )$, define the positive number $\eta^{\alpha}_{\omega}$ $(> 0)$ such that:

Hence we get the ${\widehat R}_{H_N}^{\alpha, \Theta}$ ( the $(\alpha)$-rejection region of $H_N= \{ \sigma_0 \} \subseteq \Theta ={\mathbb R}_+$ ) as follows:

where $\overline{\sigma}(x) = \Big(

\frac{\sum_{k=1}^n ( x_k -

\overline{\mu}

(x))^2}{n}

\Big)^{1/2}

$.

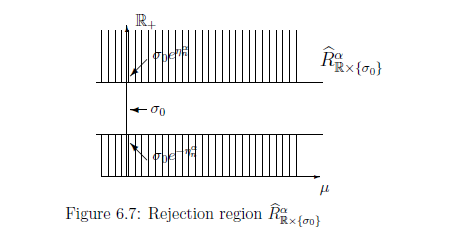

Thus, in a similar way of Remark 6.10, we see that ${\widehat R}_{{\mathbb R} \times \{\sigma_0 \}}^{\alpha}$="the slash part in Figure 6.7", where

Our present problem is as follows.

$\bullet$ the probability that a measured value$x(\in{\mathbb R}^n )$ obtained by ${\mathsf M}_{L^\infty ({\mathbb R} \times {\mathbb R}_+)}$ $({\mathsf O}_G^n = ({\mathbb R}^n, {\mathcal B}_{\mathbb R}^n, {{{G}}^n}) ,$ $S_{[(\mu_0, \sigma)]})$ satisfies that

\begin{align}

E(x) \in {\widehat R}_{{H_N}}^{\alpha; \Theta}

\end{align}

6.4.4: Statistical hypothesis testing [null hypothesis $H_N=(0, \sigma_0] \subseteq \Theta = {\mathbb R}_+$]

Our present problem is as follows.

Consider the simultaneous normal measurement ${\mathsf M}_{L^\infty ({\mathbb R} \times {\mathbb R}_+)}$ $({\mathsf O}_G^n = ({\mathbb R}^n, {\mathcal B}_{\mathbb R}^n, {{{G}}^n}) ,$ $S_{[(\mu, \sigma)]})$. Assume the null hypothesis $H_N$ such that

\begin{align} H_N= (0, \sigma_0 ] (\subseteq \Theta= {\mathbb R}_+ ) \end{align}

Let $0 < \alpha \ll 1$. Then, find the rejection region ${\widehat R}_{{H_N}}^{\alpha; \Theta}( \subseteq \Theta)$ (which may depend on $\mu$) such that

| $\bullet$ | the probability that a measured value $x (\in{\mathbb R}^n )$ obtained by ${\mathsf M}_{L^\infty ({\mathbb R} \times {\mathbb R}_+)}$ $({\mathsf O}_G^n = ({\mathbb R}^n, {\mathcal B}_{\mathbb R}^n, {{{G}}^n}) ,$ $S_{[(\mu_0, \sigma)]})$ satisfies \begin{align} E(x) \in {\widehat R}_{{H_N}}^{\alpha; \Theta} \end{align} is less than $\alpha$. |

Here, the larger the rejection region ${\widehat R}_{{H_N}}^{\alpha; \Theta}$ is, the more desirable it is.

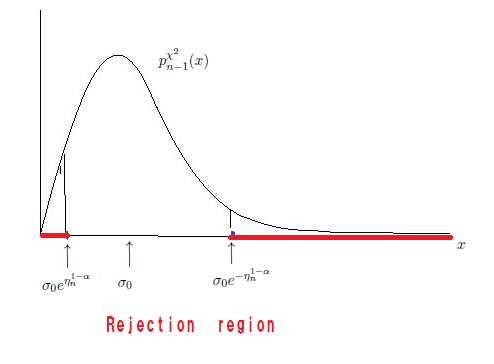

Consider the following semi-distance $d_{\Theta}^{(2)}$ in $\Theta (= {\mathbb R}_+)$:

\begin{align} d_{\Theta}^{(2)}(\sigma_1,\sigma_2) = \left\{\begin{array}{ll} | \int_{\sigma_1}^{\sigma_2} \frac{1}{\sigma} d \sigma | = |\log{\sigma_1} - \log{\sigma_2}| \quad & ( \sigma_0 \le \sigma_1, \sigma_2 ) \\ | \int_{\sigma_0}^{\sigma_2} \frac{1}{\sigma} d \sigma | = |\log{\sigma_0} - \log{\sigma_2}| \quad & ( \sigma_1 \le \sigma_0 \le \sigma_2 ) \\ | \int_{\sigma_0}^{\sigma_1} \frac{1}{\sigma} d \sigma | = |\log{\sigma_0} - \log{\sigma_1}| \quad & ( \sigma_2 \le \sigma_0 \le \sigma_1 ) \\ 0 \quad & ( \sigma_1, \sigma_2 \le \sigma_0 ) \end{array}\right. \tag{6.57} \end{align}

For any $ \omega=(\mu, {\sigma} ) (\in \Omega= {\mathbb R} \times {\mathbb R}_+ )$, define the positive number $\eta^\alpha_{\omega}$ $(> 0)$ such that:

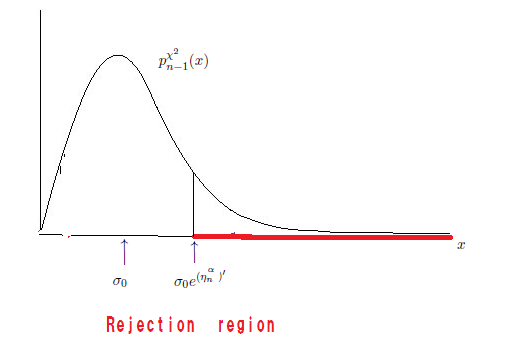

\begin{align} \eta^\alpha_{\omega} = \inf \{ \eta > 0: [F (E^{-1} ( {{ Ball}^C_{d_{\Theta}^{(2)}}}(\omega ; \eta)))](\omega ) \le \alpha \} \tag{6.58} \end{align}

where

\begin{align} {{ Ball}^C_{d_{\Theta}^{(2)}}}(\omega ; \eta ) = {{ Ball}^C_{d_{\Theta}^{(2)}}}((\mu ; {\sigma} ), \eta ) = {\mathbb R} \times [ \sigma e^{\eta}, \infty ) \tag{6.59} \end{align}

Then,

\begin{align} & E^{-1}( {{ Ball}^C_{d_{\Theta}^{(2)}}}(\omega ; \eta )) = E^{-1} \Big( [ \sigma e^{\eta}, \infty ) \Big) \nonumber \\ = & \{ (x_1, \ldots , x_n ) \in {\mathbb R}^n \;: \; \sigma e^{\eta} \le \overline{\sigma}(x) = \Big( \frac{\sum_{k=1}^n ( x_k - \overline{\mu} (x))^2}{n} \Big)^{1/2} \} \tag{6.60} \end{align}

Hence we see, by the Gauss integral (6.7), that

\begin{align} & [{G}^n (E^{-1}({{ Ball}^C_{d_{\Theta}^{(2)}}}(\omega; \eta )))] (\omega) \nonumber \\ = & \frac{1}{(\sqrt{2 \pi }\sigma)^n} \underset{ \sigma e^{\eta} \le \overline{\sigma}(x) }{\int \cdots \int} \exp\Big[ - \frac{\sum_{k=1}^n (x_k - \mu )^2 } {2 \sigma^2} \Big] d x_1 d x_2 \cdots d x_n \nonumber \\ = & \int_{\frac{n e^{ 2 \eta}\sigma^2}{\sigma^2}}^\infty p^{\chi^2}_{n-1} (x ) dx = \int_{n e^{ 2 \eta}}^\infty p^{\chi^2}_{n-1} (x ) dx \tag{6.61} \end{align}

Solving the following equation, define $(\eta^\alpha_{n})' (>0)$ such that

\begin{align} \alpha = \int_{{n} e^{2 (\eta^\alpha_{n})'}}^{\infty} p^{\chi^2}_{n-1} (x ) dx \tag{6.62} \end{align}

Hence we get ${\widehat R}_{H_N}^{\alpha, \Theta}$ (the $(\alpha)$-rejection region of $H_N= (0, \sigma_0]$) as follows:

\begin{align} {\widehat R}_{H_N}^{\alpha, \Theta} & = {\widehat R}_{ (0, \sigma_0 ] }^{\alpha, \Theta} = \bigcap_{\pi(\omega ) \in (0, \sigma_0 ]} \{ {E(x)} (\in \Theta = {\mathbb R}_+) : d^{(2)}_\Theta (E(x), \pi(\omega) ) \ge \eta^\alpha_{\omega} \} \nonumber \\ & = \bigcap_{\pi(\omega ) \in (0, \sigma_0 ]} \{ {E(x)} (\in \Theta) : d^{(2)}_\Theta (E(x), \pi(\omega)) \ge (\eta^\alpha_{n})' \} \nonumber \\ & = \{ {\sigma}(= \overline{\sigma}(x) ) \in {\mathbb R}_+ \;: \; {\sigma_0} e^{(\eta^\alpha_{n})' } \le \overline{\sigma}(x) \} \tag{6.63} \end{align}

where $\overline{\sigma}(x) = \Big( \frac{\sum_{k=1}^n ( x_k - \overline{\mu} (x))^2}{n} \Big)^{1/2}$.
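As a worked restatement of (6.62) and (6.63), the threshold can be written in terms of the upper $\alpha$-point of the $\chi^2$ distribution with $n-1$ degrees of freedom. The symbol $\chi^2_{n-1}(\alpha)$ is notation assumed here for illustration and does not appear in the text above; it denotes the number satisfying $\alpha = \int_{\chi^2_{n-1}(\alpha)}^{\infty} p^{\chi^2}_{n-1}(x) dx$. Comparing this with (6.62) gives

\begin{align} n e^{2 (\eta^\alpha_{n})'} = \chi^2_{n-1}(\alpha), \qquad (\eta^\alpha_{n})' = \frac{1}{2} \log \frac{\chi^2_{n-1}(\alpha)}{n} \end{align}

so that the condition in (6.63) is equivalent to

\begin{align} {\sigma_0} e^{(\eta^\alpha_{n})' } \le \overline{\sigma}(x) \iff \frac{n \, \overline{\sigma}(x)^2}{\sigma_0^2} \ge \chi^2_{n-1}(\alpha) \end{align}

Note that $(\eta^\alpha_{n})' > 0$ requires $\chi^2_{n-1}(\alpha) > n$, which holds when $\alpha$ is sufficiently small. For example, with $n=10$ and $\alpha=0.05$, the standard table value $\chi^2_{9}(0.05) \approx 16.92$ gives $(\eta^\alpha_{n})' \approx \frac{1}{2} \log 1.692 \approx 0.263$ and $e^{(\eta^\alpha_{n})'} \approx 1.30$, so $H_N$ is rejected when $\overline{\sigma}(x) \ge 1.30 \, \sigma_0$.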

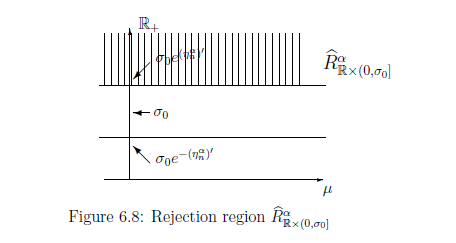

Thus, in a manner similar to Remark 6.10 (in $\S$6.3), we see that ${\widehat R}_{{\mathbb R} \times (0, \sigma_0]}^{\alpha}$ is the hatched part in Figure 6.8, where

\begin{align} {\widehat R}_{{\mathbb R} \times (0, \sigma_0]}^{\alpha} = \{ (\mu , \overline{\sigma}(x) ) \in {\mathbb R} \times {\mathbb R}_+ \;:\; \; {\sigma_0} e^{(\eta^\alpha_{n})' } \le \overline{\sigma}(x) \} \tag{6.64} \end{align}
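The procedure of this section (solve (6.62) for $(\eta^\alpha_{n})'$, then test the condition in (6.63)) can be sketched numerically as follows. This is only a minimal illustration under stated assumptions, not the text's own implementation: all function names (`reg_upper_gamma`, `chi2_sf`, `chi2_upper_point`, `eta_prime`, `sigma_bar`, `d2`, `rejects`) are hypothetical, the $\chi^2$ tail integral is evaluated with a textbook series and continued-fraction expansion of the incomplete gamma function using only the standard library, and (6.62) is solved by plain bisection.

```python
import math


def reg_upper_gamma(a, x):
    """Regularized upper incomplete gamma function Q(a, x), for a > 0, x >= 0."""
    if x <= 0.0:
        return 1.0
    log_prefactor = -x + a * math.log(x) - math.lgamma(a)
    if x < a + 1.0:
        # Power series for the lower function P(a, x); then Q = 1 - P.
        k = a
        term = 1.0 / a
        total = term
        for _ in range(10000):
            k += 1.0
            term *= x / k
            total += term
            if abs(term) < abs(total) * 1e-15:
                break
        return 1.0 - total * math.exp(log_prefactor)
    # Continued fraction for Q(a, x), evaluated with the modified Lentz method.
    tiny = 1e-300
    b = x + 1.0 - a
    c = 1.0 / tiny
    d = 1.0 / b
    h = d
    for i in range(1, 10000):
        an = -i * (i - a)
        b += 2.0
        d = an * d + b
        if abs(d) < tiny:
            d = tiny
        c = b + an / c
        if abs(c) < tiny:
            c = tiny
        d = 1.0 / d
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < 1e-15:
            break
    return h * math.exp(log_prefactor)


def chi2_sf(x, dof):
    """Tail integral of the chi-square density with `dof` degrees of freedom."""
    return reg_upper_gamma(0.5 * dof, 0.5 * x)


def chi2_upper_point(alpha, dof):
    """Solve chi2_sf(q, dof) = alpha for q by bisection (the tail is decreasing in q)."""
    lo, hi = 0.0, float(dof)
    while chi2_sf(hi, dof) > alpha:
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if chi2_sf(mid, dof) > alpha:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def eta_prime(n, alpha):
    """Solution of (6.62): n * exp(2 * eta) equals the upper alpha-point (needs n >= 2)."""
    return 0.5 * math.log(chi2_upper_point(alpha, n - 1) / n)


def sigma_bar(x):
    """Sample standard deviation with divisor n, as defined after (6.63)."""
    n = len(x)
    mean = sum(x) / n
    return math.sqrt(sum((xk - mean) ** 2 for xk in x) / n)


def d2(s1, s2, sigma0):
    """Semi-distance (6.57): values at or below sigma0 are identified with sigma0."""
    return abs(math.log(max(s1, sigma0)) - math.log(max(s2, sigma0)))


def rejects(x, sigma0, alpha):
    """True when sigma_bar(x) lies in the rejection region (6.63)."""
    return sigma_bar(x) >= sigma0 * math.exp(eta_prime(len(x), alpha))


if __name__ == "__main__":
    sample = [float(k) for k in range(10)]  # illustrative data, not from the text
    print("eta' for n=10, alpha=0.05:", eta_prime(10, 0.05))
    print("reject sigma0=1:", rejects(sample, 1.0, 0.05))
    print("reject sigma0=3:", rejects(sample, 3.0, 0.05))
```

Since $(\eta^\alpha_{n})' > 0$, the condition in (6.63) is the same as `d2(sigma_bar(x), sigma0, sigma0) >= eta_prime(n, alpha)`, which is why the semi-distance helper is included. If SciPy is available, its chi-square distribution routines could replace the hand-written tail integral and bisection.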